The executive order is an attempt to comprehensively address the fears surrounding AI technology while aligning with President Biden’s vision for AI development

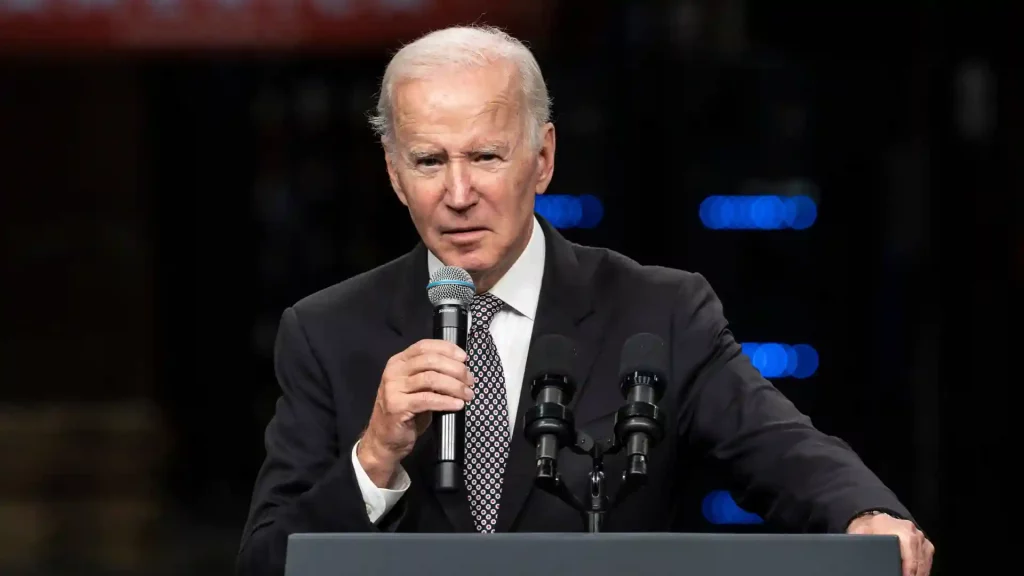

In response to the widespread popularity of generative AI systems like ChatGPT, calls for strong AI regulation have grown louder, driven by concerns about the technology’s disruptive potential, including job losses, warfare, and privacy risks. Against this backdrop, President Joe Biden on Monday signed a landmark executive order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. The order aims to steer AI development toward safety, security, and responsible use.

In a short speech before signing the order, Biden stressed its importance: “This landmark executive order is a testament to what we stand for: safety, security, trust, openness, American leadership, and the undeniable rights endowed by a creator that no creation can take away. We face a genuine inflection point, one of those moments where the decisions we make in the very near term are going to set the course for the next decades… There’s no greater change that I can think of in my life than AI presents.”

What do the new regulations stipulate?

The executive order is an attempt to comprehensively address the fears surrounding AI technology while aligning with President Biden’s vision for AI development. For now, many of its provisions lack the force of law; their effect will largely depend on how the federal agencies involved implement them, and they could face legal challenges that ultimately reach the Supreme Court.

The executive order directs federal agencies and departments to create standards and regulations for AI across sectors such as criminal justice, education, healthcare, housing, and labour. The focus is on protecting Americans’ civil rights and liberties while still encouraging the responsible development of AI. The order also invokes the Defense Production Act to require companies to notify the federal government when training AI models that pose national security risks.

Furthermore, the National Institute of Standards and Technology (NIST) is to establish testing standards for AI companies to follow. The order also addresses concerns that AI could enable the creation of dangerous chemical, biological, or nuclear weapons: the Departments of Energy and Homeland Security will evaluate these risks of misuse, with Homeland Security assessing AI’s role in producing such threats, while the Department of Defense will recommend measures to mitigate biosecurity risks.

One specific concern is the use of AI to generate synthetic nucleic acids, which could be used in genetic engineering. To address this, the Office of Science and Technology Policy will work with various departments on screening and monitoring the procurement of synthetic nucleic acids, and the Department of Commerce will develop rules and best practices for screening providers of synthetic nucleic acid sequences.

It’s just the beginning

The order also contains provisions for safeguarding Americans’ privacy, although it acknowledges that meaningful privacy protection requires federal legislation and calls on Congress to pass it. AI’s ability to create deepfakes, which can be nearly indistinguishable from authentic content, is a top concern. The order calls for the Department of Commerce to issue guidance on best practices for detecting AI-generated content.

Despite its breadth, the executive order is not the only action the government plans to take; Congress is also reportedly preparing new legislation. And while the Biden administration touts the order as a groundbreaking step toward AI safety, others have moved toward stronger measures, such as the European Union’s AI Act. The order is the latest step in a global movement to regulate AI, and Vice President Kamala Harris plans to participate in international summits on AI regulation to help coordinate global action.

Whether the executive order can satisfy all stakeholders remains to be seen; for now, it has been met with cautious optimism. Microsoft president Brad Smith called it a critical step forward, while digital rights advocacy groups have also welcomed it as a positive first step but are taking a wait-and-see approach to its implementation.